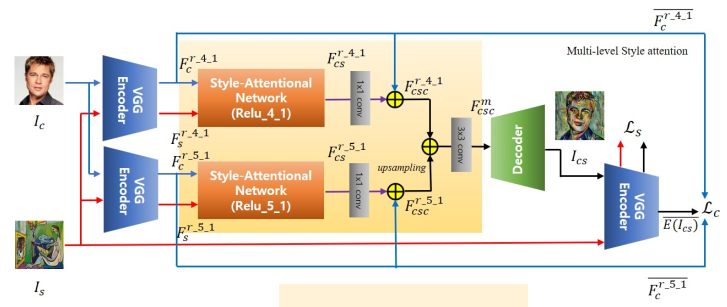

Image style transfer is a neural network technique that copies the style of one image onto another image while preserving the second image's content. Several algorithms can perform style transfer; this project uses style-attentional networks. Attention is a mechanism that lets a neural network focus on the most relevant parts of its input, such as the important words in a sentence or regions in an image. Here, attention identifies the important parts of the image features, so that the style is applied coherently across the whole image rather than simply overlaid like a mask, and so that the parts of the content deemed important are preserved.
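To make the attention idea concrete, here is a minimal NumPy sketch of a style-attention block in the spirit of SANet. It assumes the content and style feature maps are already flattened to shape (channels, positions), and it uses plain matrices in place of the 1x1 convolutions (biases omitted). All function and parameter names are illustrative and are not taken from this project's code.

```python
import numpy as np


def mean_variance_norm(feat, eps=1e-5):
    """Normalize each channel (row) of a (C, N) feature map to zero mean, unit std."""
    mean = feat.mean(axis=1, keepdims=True)
    std = feat.std(axis=1, keepdims=True)
    return (feat - mean) / (std + eps)


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def style_attention(content, style, w_f, w_g, w_h, w_out):
    """content: (C, Nc) and style: (C, Ns) flattened features.

    w_f, w_g, w_h, w_out: (C, C) matrices standing in for 1x1 convolutions
    (an assumption made to keep the sketch dependency-free).
    """
    f = w_f @ mean_variance_norm(content)  # queries from content, (C, Nc)
    g = w_g @ mean_variance_norm(style)    # keys from style, (C, Ns)
    h = w_h @ style                        # values from style, (C, Ns)
    # (Nc, Ns): each content position gets a distribution over style positions
    attn = softmax(f.T @ g, axis=-1)
    out = h @ attn.T                       # style values gathered per content position, (C, Nc)
    return w_out @ out + content           # residual connection keeps the content structure
```

Because every content position attends over all style positions, each region of the content picks up the style patterns most similar to it, instead of receiving one global style statistic.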

Workflow

In the general workflow, the API receives the content and style images, runs them through the model, and returns the resulting image encoded as base64.
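The request/response flow above can be sketched with the standard library alone. This assumes a hypothetical `model` callable that takes raw content and style image bytes and returns the stylized image as bytes; the real model interface is not described here.

```python
import base64
from typing import Callable

# Hypothetical model interface: (content bytes, style bytes) -> stylized image bytes.
StyleModel = Callable[[bytes, bytes], bytes]


def run_style_transfer(content_b64: str, style_b64: str, model: StyleModel) -> str:
    """Decode both input images, run the model, and return the result as base64 text."""
    content_bytes = base64.b64decode(content_b64, validate=True)  # rejects non-base64 input
    style_bytes = base64.b64decode(style_b64, validate=True)
    result_bytes = model(content_bytes, style_bytes)
    return base64.b64encode(result_bytes).decode("ascii")
```

Returning base64 text keeps the response JSON-friendly, which is why the client can receive the image in the same payload as any other fields.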

Components

Model

The model is a modified version of style-attentional networks (SANet); the main change is to the identity loss coefficients. The architecture consists of three separate models: an encoder, a style network and a decoder. The encoder used here is VGG-16.
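For reference, the original SANet paper defines the identity loss as shown below. $I_c$ and $I_s$ are the content and style images, $I_{cc}$ (respectively $I_{ss}$) is the output produced when the same content (style) image is used as both inputs, and $\phi_i$ is the $i$-th encoder layer used in the loss. The weights $\lambda_{identity1}$ and $\lambda_{identity2}$ are presumably the coefficients this project modifies; the values used here are not stated.

```latex
\mathcal{L}_{identity} = \lambda_{identity1}\left(\lVert I_{cc} - I_c \rVert_2 + \lVert I_{ss} - I_s \rVert_2\right)
  + \lambda_{identity2}\sum_{i=1}^{L}\left(\lVert \phi_i(I_{cc}) - \phi_i(I_c) \rVert_2 + \lVert \phi_i(I_{ss}) - \phi_i(I_s) \rVert_2\right)

\mathcal{L} = \lambda_c \mathcal{L}_c + \lambda_s \mathcal{L}_s + \mathcal{L}_{identity}
```

Raising the identity weights pushes the network to reproduce an input exactly when content and style are the same image, which tends to preserve more content structure in the stylized output.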

API

The API is implemented with Flask, using Flasgger to generate the OpenAPI documentation. FloydHub is used to deploy the API to the cloud so that it is publicly available.

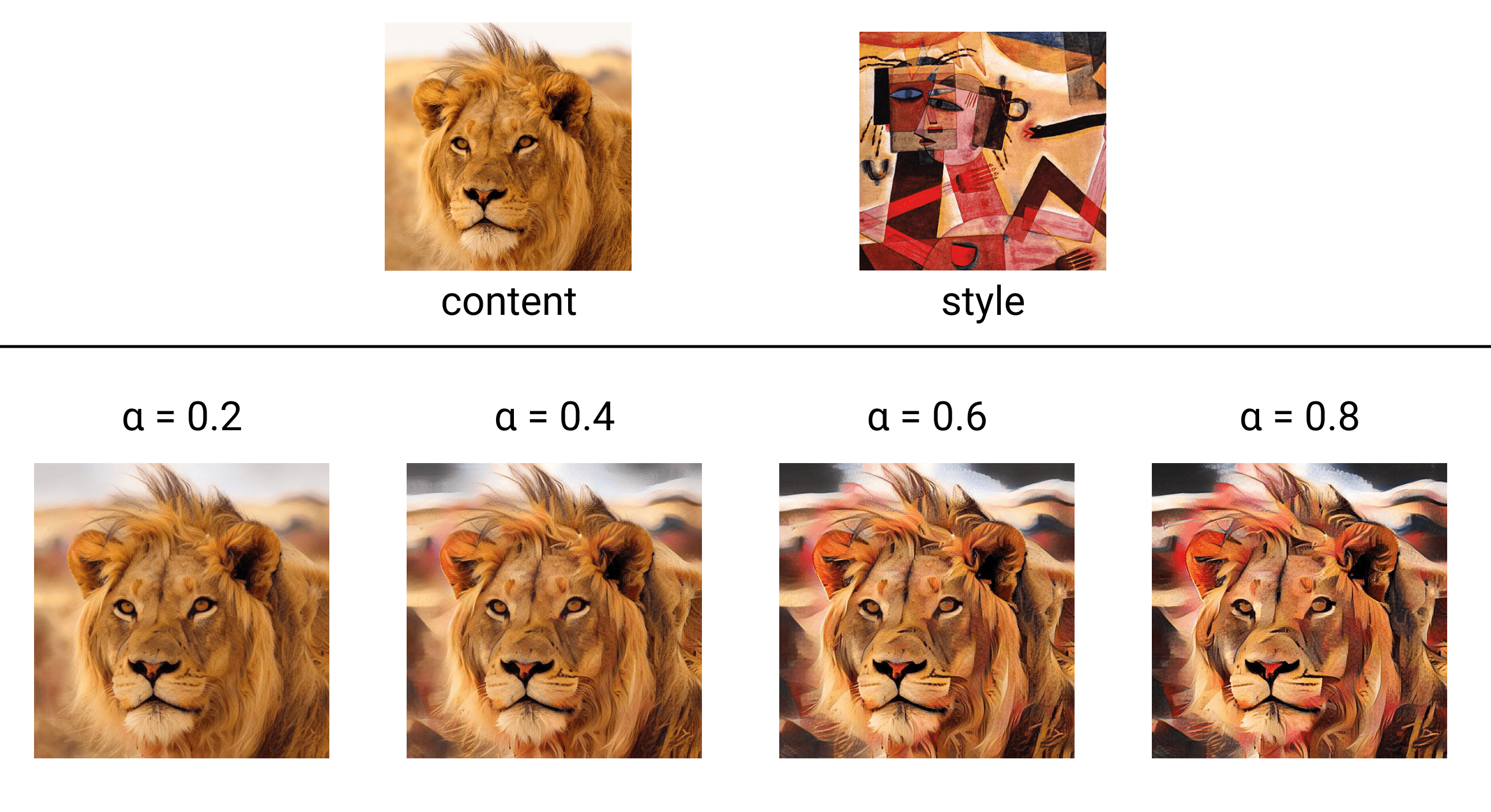

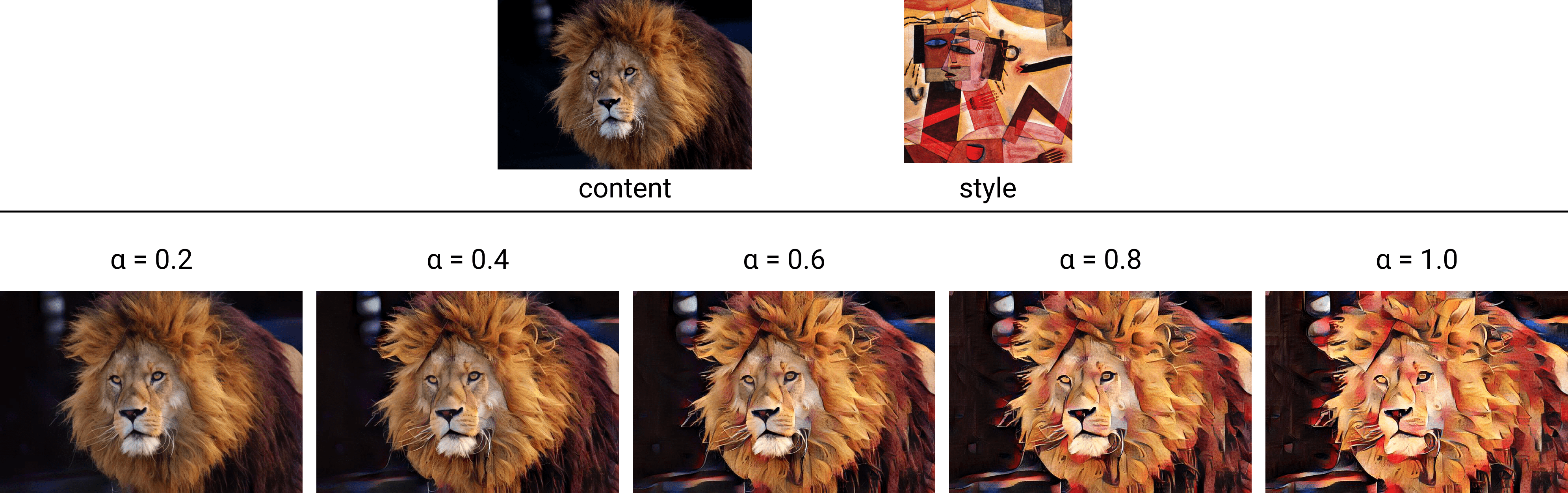

Additional API features:

- Allows changing the style-content trade-off (alpha) during inference

- Accepts the style image either as a base64-encoded image or as a path to a style stored in the project's S3 bucket
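The two features above could be handled as in the following sketch. It assumes hypothetical payload fields `style` (base64 image), `style_path` (key in the project's S3 bucket) and `alpha`; downloading the S3 object is left out. It also assumes the trade-off is applied as a linear interpolation between content and stylized features before decoding, a common approach in feature-space style transfer that may differ from this project's implementation.

```python
import base64


def parse_style_request(payload: dict):
    """Return ((source, value), alpha) where source is "base64" or "s3".

    Field names "style", "style_path" and "alpha" are hypothetical.
    """
    alpha = float(payload.get("alpha", 1.0))
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if "style" in payload:
        # Inline style image: decode it straight away.
        return ("base64", base64.b64decode(payload["style"], validate=True)), alpha
    if "style_path" in payload:
        # Stored style: the caller would fetch this key from S3 before inference.
        return ("s3", payload["style_path"]), alpha
    raise ValueError("provide either 'style' or 'style_path'")


def blend_features(content_feat, stylized_feat, alpha):
    """Linear style-content trade-off in feature space.

    alpha = 1.0 keeps the fully stylized features; alpha = 0.0 keeps the content features.
    Works for floats and NumPy arrays alike.
    """
    return alpha * stylized_feat + (1.0 - alpha) * content_feat
```

Blending in feature space, rather than blending output pixels, lets the decoder produce a coherent image at every alpha value.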

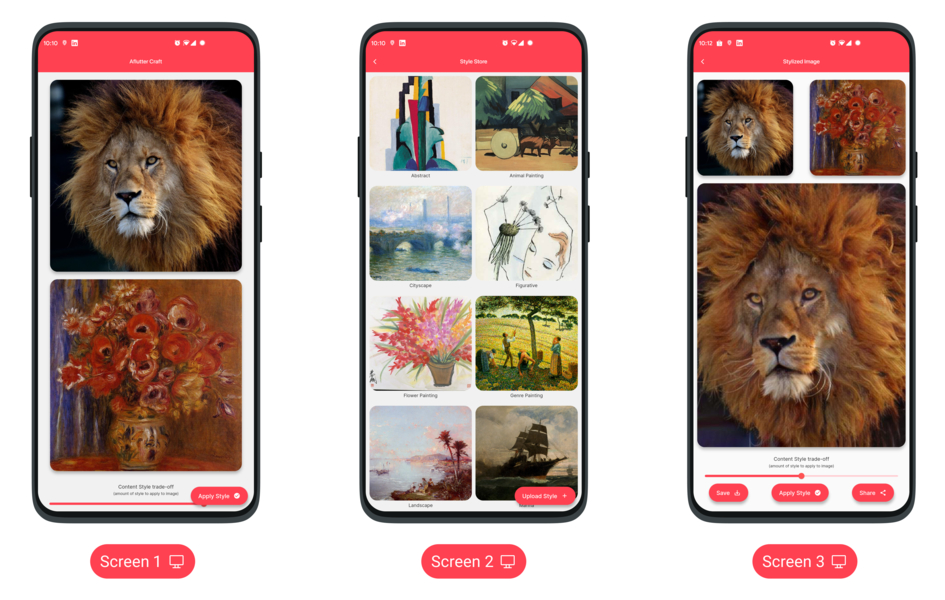

Cross-Platform Application

The client application is built with Flutter and supports:

- Android

- iOS

- Windows

- Web

- macOS

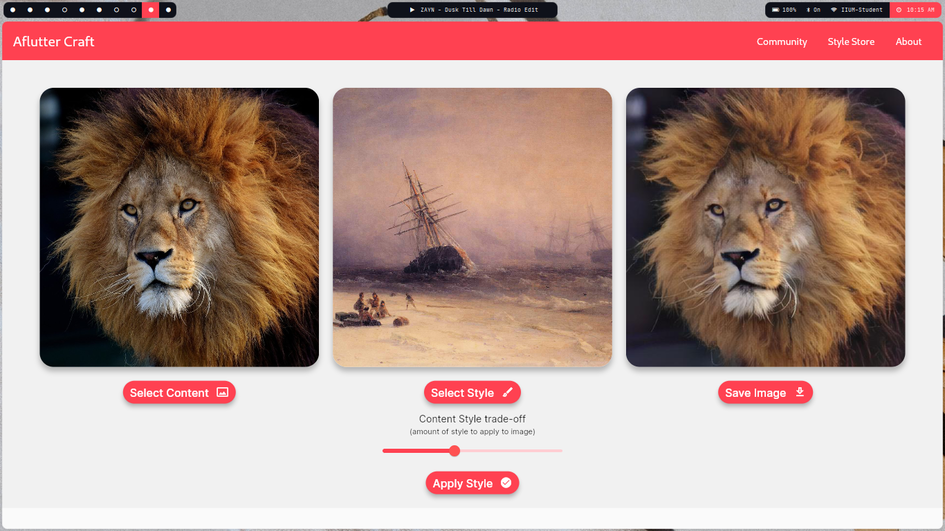

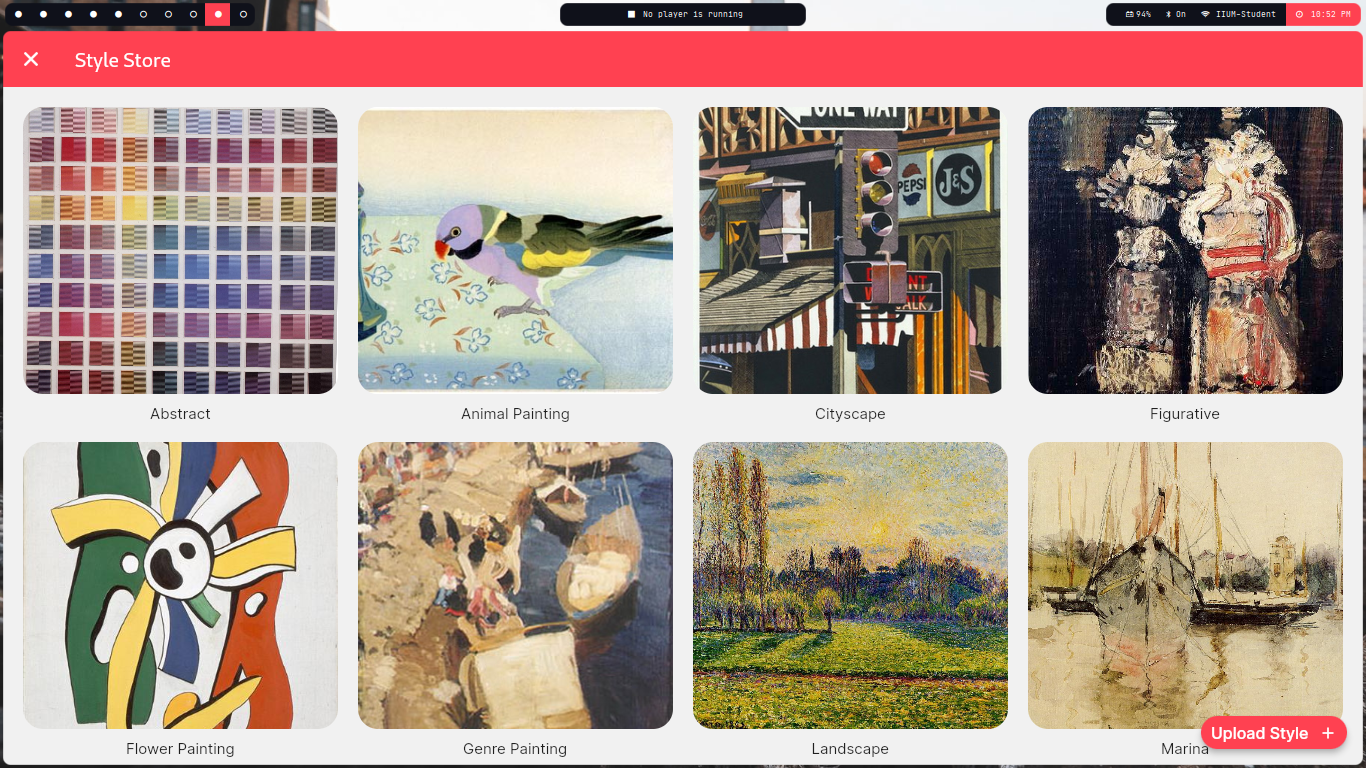

Screenshots

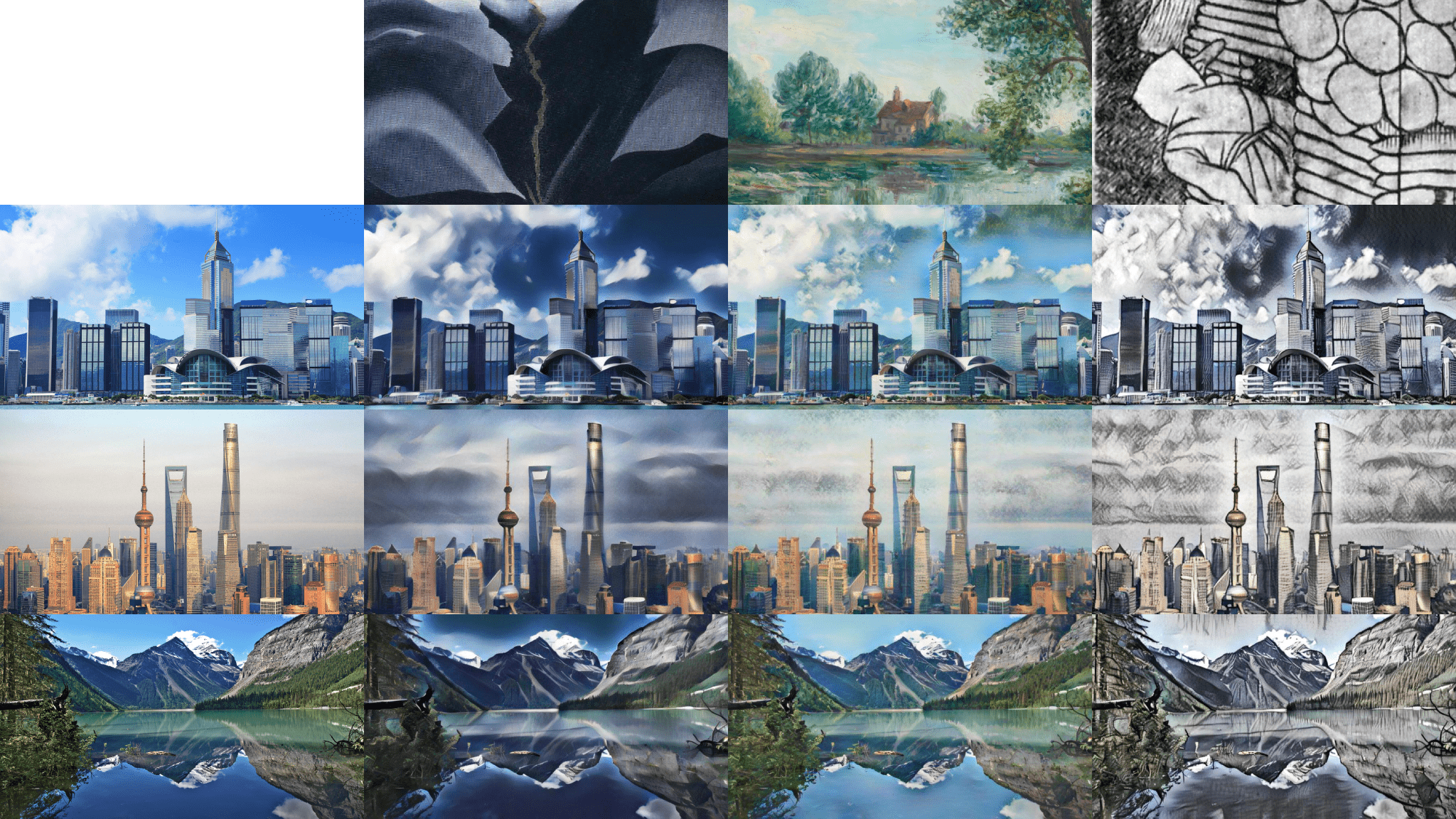

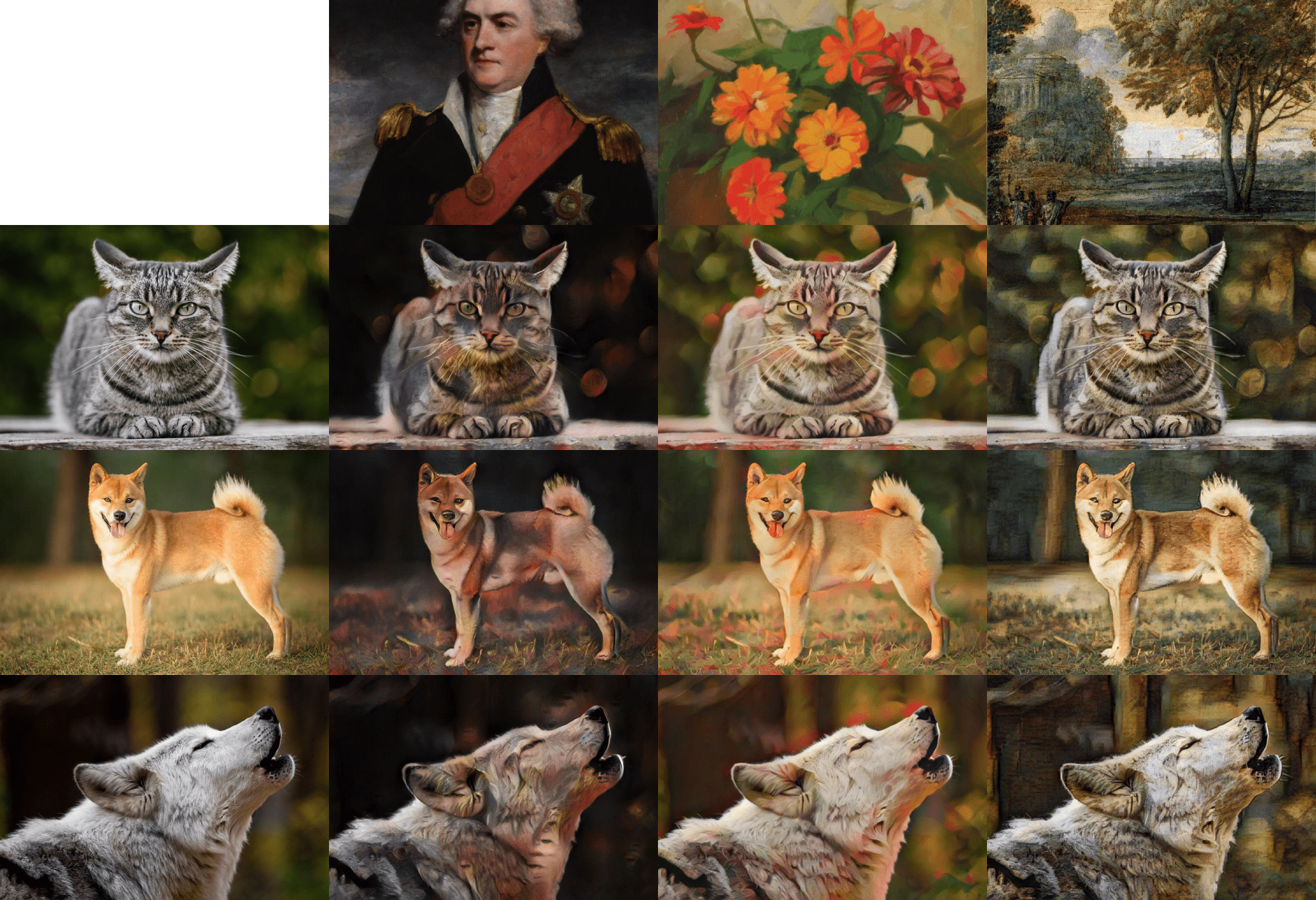

Example Results

Abstract (Buildings)

Animals

Effects of Different Alpha Values